Enabling Technologies for Affordable, Accurate and Ubiquitous Motion Capture and Visualization

- Work with low-cost devices

- Unlock their potential using AI and data

- Promote accessibility and convenience

We aim to develop technologies that enable low-cost devices to deliver accurate and reliable motion capture and visualization. Using the data collected in this lab as ground truth, we develop AI filters that boost the performance of these devices.

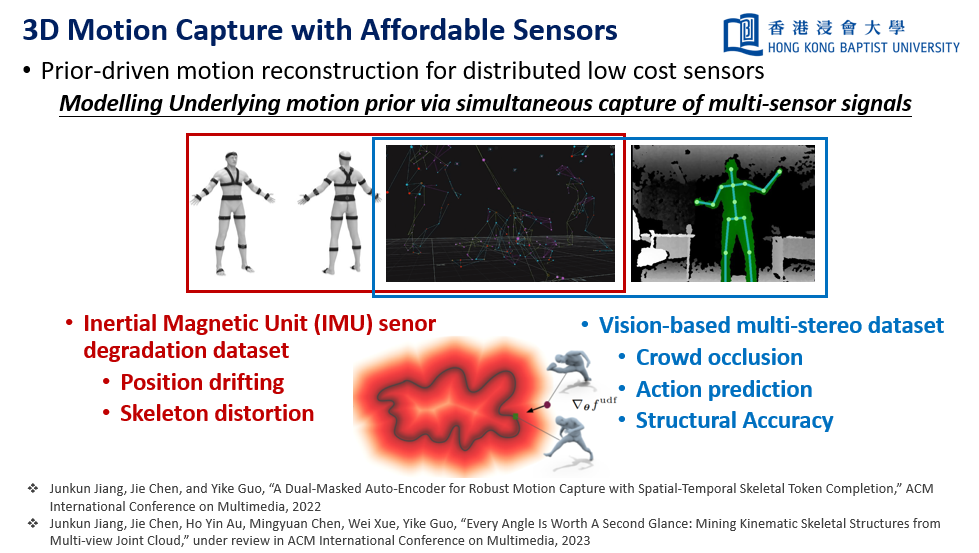

For example, we are building an IMU degradation dataset for inertial-magnetic sensors and a multi-stereo dataset for ordinary RGB cameras. Because the coordinate systems of these sensors are well aligned with that of our high-precision mocap system, we can model their degradation patterns. This helps us alleviate intrinsic sensor errors such as drift and distortion, and develop intelligent solvers that address the challenges caused by occlusion in crowded social venues.
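As a rough illustration of how paired sensor and mocap data can expose a degradation pattern, the sketch below assumes the simplest possible drift model: the error of a single heading (yaw) angle grows linearly with time. It fits that model by ordinary least squares against mocap ground truth and subtracts the predicted drift from raw readings. The names `DriftModel` and `fit_drift` are hypothetical, and real IMU degradation (and the AI filters mentioned earlier) is considerably richer than a constant bias plus a constant rate.

```python
from dataclasses import dataclass


@dataclass
class DriftModel:
    """Hypothetical linear drift model: error(t) ~= bias + rate * t."""
    bias: float
    rate: float

    def correct(self, t: float, reading: float) -> float:
        # Remove the predicted drift from a raw sensor reading taken at time t.
        return reading - (self.bias + self.rate * t)


def fit_drift(times, readings, ground_truth) -> DriftModel:
    """Least-squares fit of the sensor error against mocap ground truth.

    Assumes readings and ground_truth are already expressed in the same
    (aligned) coordinate frame and sampled at the same timestamps.
    """
    errors = [r - g for r, g in zip(readings, ground_truth)]
    n = len(times)
    mean_t = sum(times) / n
    mean_e = sum(errors) / n
    var_t = sum((t - mean_t) ** 2 for t in times)
    if var_t == 0:
        raise ValueError("need at least two distinct timestamps")
    cov_te = sum((t - mean_t) * (e - mean_e) for t, e in zip(times, errors))
    rate = cov_te / var_t            # slope of error over time (drift rate)
    bias = mean_e - rate * mean_t    # error at t = 0 (constant offset)
    return DriftModel(bias=bias, rate=rate)
```

For instance, if the raw yaw equals the ground truth plus a 2° offset and 0.5°/s of drift, sampled at t = 0, 1, 2, 3, 4 s, `fit_drift` recovers `bias == 2.0` and `rate == 0.5`, and `correct` maps each raw reading back onto the mocap value.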

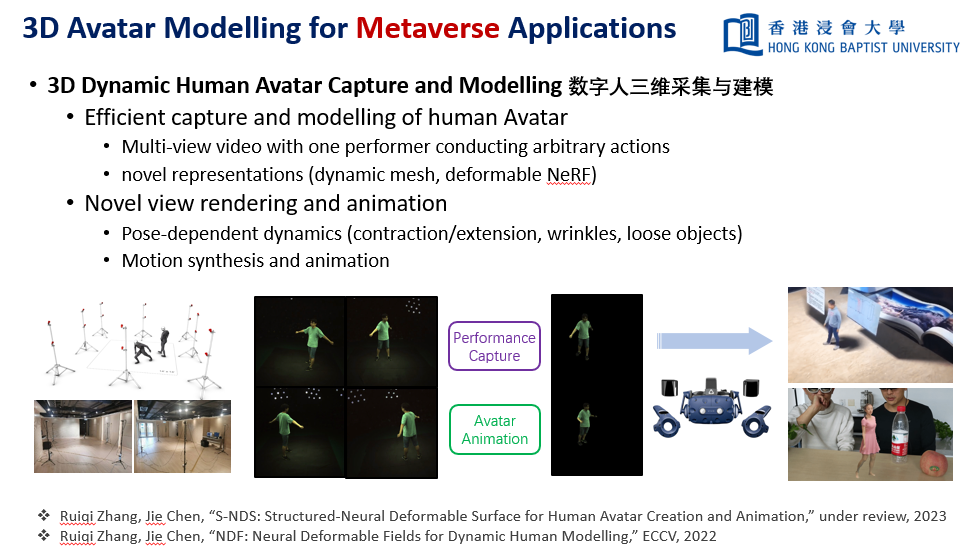

Modeling and visualizing 3D human shape and appearance is an important task for metaverse applications such as immersive telepresence. Conventionally, this requires expensive multi-camera systems with on the order of 100 cameras. We instead develop solutions that use only a few video cameras to capture a short video of the target performer's activities. From this video we learn a dynamic volumetric representation of the performer and use it directly for rendering and animation.
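Volumetric representations are typically turned into images by integrating density and color along each camera ray. The sketch below shows the standard emission-absorption quadrature popularized by NeRF-style methods, applied to one ray at a single time instant; it is a generic illustration under that assumption, not the lab's renderer. A dynamic representation would supply different densities and colors for each frame. `composite_ray` is a hypothetical helper name.

```python
import math


def composite_ray(densities, colors, deltas):
    """Emission-absorption compositing of samples along one camera ray.

    densities: volume density sigma_i at each sample (>= 0)
    colors:    RGB tuple c_i at each sample
    deltas:    distance delta_i between consecutive samples
    Returns the rendered RGB color and the accumulated opacity.
    """
    transmittance = 1.0  # T_i: fraction of light that reaches sample i unabsorbed
    rgb = [0.0, 0.0, 0.0]
    for sigma, c, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha           # contribution of sample i
        for k in range(3):
            rgb[k] += weight * c[k]
        transmittance *= 1.0 - alpha             # light left after this segment
    return tuple(rgb), 1.0 - transmittance
```

With a nearly opaque first sample, the ray returns essentially that sample's color and full opacity: `composite_ray([1e9, 1.0], [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [1.0, 1.0])` yields `((1.0, 0.0, 0.0), 1.0)`, because nothing behind the first sample remains visible.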